DoubleStar: Long-Range Attack Towards Depth Estimation based Obstacle Avoidance in Autonomous Systems

@inproceedings{zhou2022doublestar,
  title={DoubleStar: Long-Range Attack Towards Depth Estimation based Obstacle Avoidance in Autonomous Systems},
  author={Zhou, Ce and Yan, Qiben and Shi, Yan and Sun, Lichao},
  booktitle={USENIX Security Symposium},
  month={August},
  year={2022}
}

DoubleStar is a long-range attack on stereo cameras (a.k.a. 3D depth cameras) that injects fake obstacle depth by projecting pure light from two complementary light sources. We successfully attack two commercial stereo cameras designed for autonomous systems (ZED and Intel RealSense D415). Visualizing the fake depth perceived by these stereo cameras reveals the false stereo matching induced by DoubleStar. We use ArduPilot to simulate the attack and demonstrate its impact on drones. To validate the attack on real autonomous systems, we attack a commercial drone (DJI Phantom 4 Pro V2) in the real world. Our attack can bring a flying drone to a sudden stop or make it drift away from its original path, and it can also cause false alarms over a long distance under various lighting conditions. Our experimental results show that DoubleStar can create fake depth from attack distances of up to 15 meters at night and up to 8 meters during the daytime. The DoubleStar attack could cause severe accidents, even loss of life, if we blindly trust the obstacle avoidance capability of autonomous systems.

Target Devices in the Experiment

Team

DoubleStar was discovered by the following team of academic researchers:

- Ce Zhou at SEIT Lab, Michigan State University

- Qiben Yan at SEIT Lab, Michigan State University

- Yan Shi at Michigan State University

- Lichao Sun at Lehigh University

Contact us at fakedepth2021@gmail.com

Features of DoubleStar


How does DoubleStar work?

DoubleStar is the first black-box attack on stereo vision-based depth estimation algorithms. An attacker injects two light sources separately, one into each lens of the stereo camera. Each injected light source appears brighter and more prominent in one camera than in the other. Since stereo matching tries to find the pixels in the left and right images that correspond to the same physical point, it treats the strong light source in the left image and the strong light source in the right image as the same object, producing a fake obstacle depth.
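To make the matching step concrete, here is a minimal sketch of sum-of-absolute-differences (SAD) block matching along one rectified image row. It is a simplified stand-in for classic block matching, not the algorithm any particular camera runs; the `match_scanline` helper, spot positions, window size, and search range are all hypothetical. The left and right rows each contain a bright spot from a *different* light source, yet the matcher pairs them because they are the most similar windows.

```python
import numpy as np


def match_scanline(left_row, right_row, x_left, half_window=2, max_disparity=40):
    """Return the disparity whose right-image window best matches (lowest SAD)
    the left-image window centred at x_left. Hypothetical toy matcher."""
    patch = left_row[x_left - half_window : x_left + half_window + 1].astype(float)
    best_d, best_cost = 0, np.inf
    for d in range(max_disparity + 1):
        xr = x_left - d  # candidate position in the right image
        if xr - half_window < 0:
            break  # window would run off the left edge of the row
        cand = right_row[xr - half_window : xr + half_window + 1].astype(float)
        cost = np.abs(patch - cand).sum()  # sum of absolute differences
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# Dark background with one injected bright spot per image (hypothetical positions).
width = 100
left_row = np.full(width, 10, dtype=np.uint8)
right_row = np.full(width, 10, dtype=np.uint8)
left_row[60:63] = 255   # strong spot dominating the left camera
right_row[30:33] = 255  # a different light source dominating the right camera

d = match_scanline(left_row, right_row, x_left=61)
print(d)  # 30
```

The resulting 30-pixel disparity is then converted to depth by triangulation (depth = focal length × baseline / disparity), so a false match between two unrelated light spots turns directly into a fake obstacle distance.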

What devices can be compromised by DoubleStar?

We validated successful DoubleStar attacks on two commercial stereo cameras designed for robotic vehicles and drones (ZED and Intel RealSense D415) and on one of the most advanced drones (DJI Phantom 4 Pro V2) under different ambient light conditions. We believe that more devices equipped with stereo cameras could be vulnerable.

Does DoubleStar work for state-of-the-art AI-based depth estimation algorithms?

Yes. DoubleStar works not only for classic stereo depth estimation algorithms, e.g., block matching (BM) and semi-global block matching (SGBM), but also for state-of-the-art deep-learning-based stereo depth estimation algorithms. We successfully verified our attack against DispNet, PSMNet, and AANet.

What can the attackers do?

- ⚑ Drift the drone away from its original path.

- ⚑ Suddenly stop a moving drone.

- ⚑ Shake the drone dramatically.

How do you defend against DoubleStar?

- ★ Detect over-saturated pixels in the stereo image pair.

- ★ Add a camera lens hood to reduce the lens flare effect.

- ★ Use sensor fusion techniques to fuse different types of data.

- ★ Build robust deep neural networks.
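The first defense, detecting over-saturated pixels, can be sketched as a per-frame check on the stereo pair. This is a hypothetical illustration, not the authors' detector: it assumes 8-bit grayscale images as NumPy arrays, and the saturation `level` and `max_fraction` threshold are made-up tuning values.

```python
import numpy as np


def saturated_fraction(gray, level=250):
    """Fraction of pixels at or above `level` in an 8-bit grayscale image."""
    return float(np.mean(gray >= level))


def flag_stereo_pair(left, right, level=250, max_fraction=0.01):
    """Flag the pair if either image has more than `max_fraction` saturated pixels.
    `level` and `max_fraction` are hypothetical values, not from the DoubleStar paper."""
    return (saturated_fraction(left, level) > max_fraction
            or saturated_fraction(right, level) > max_fraction)


clean = np.full((480, 640), 80, dtype=np.uint8)
attacked = clean.copy()
attacked[200:260, 300:360] = 255  # 60 x 60 saturated spot, about 1.2% of pixels

print(flag_stereo_pair(clean, clean))     # False
print(flag_stereo_pair(attacked, clean))  # True
```

A fixed threshold like this would also fire on legitimate bright scenes such as direct sunlight or headlights, so in practice it would likely serve as one input to a broader check (for example, alongside the sensor fusion defense) rather than as a standalone alarm.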

DoubleStar Attack Demonstration

Drift Away and Shake Flying Drone (7m)

More Attack Scenarios

Stop Flying Drone in the Day (6m)

Stop Flying Drone in the Day (7m)

Stop Flying Drone at Night (6m)

Stop Flying Drone at Night (7m)

Different Attack Patterns

Attack patterns have a significant effect on the fake depth. The video shows an example in which X-shaped attack patterns produce near-distance fake depths, while trapezoid-shaped patterns create far-distance fake depths.

Drone Attack Verification

Aiming at the Drone in a Real-Time Attack

Because a small change in the lens angle causes a large shift in where the light lands on the victim side, the attacker can easily track the flying drone by continuously adjusting the aiming angle of the taped lens. As long as the projected light reaches the stereo camera, DoubleStar can always succeed.
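The sensitivity to the aiming angle follows from simple geometry. If the projector rotates by an angle Δθ, the beam's center moves by about D · tan(Δθ) at distance D. The sketch below uses a flat, idealized model that ignores beam divergence and drone motion. The `beam_shift` helper and the sample distances are illustrative choices, with 15 m being the longest nighttime attack distance reported above.

```python
import math


def beam_shift(distance_m, angle_change_deg):
    """Lateral displacement (m) of the beam centre at `distance_m` after rotating
    the projector by `angle_change_deg` (idealized flat-geometry model)."""
    return distance_m * math.tan(math.radians(angle_change_deg))


for distance in (5, 10, 15):
    shift = beam_shift(distance, 1.0)
    print(f"{distance:>2} m: 1 degree of rotation moves the beam {shift:.2f} m")
# Prints shifts of 0.09 m, 0.17 m and 0.26 m respectively.
```

The shift grows linearly with distance, so at long range the attacker needs only fractions of a degree to keep the light on the drone's camera as it moves.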

Simulation

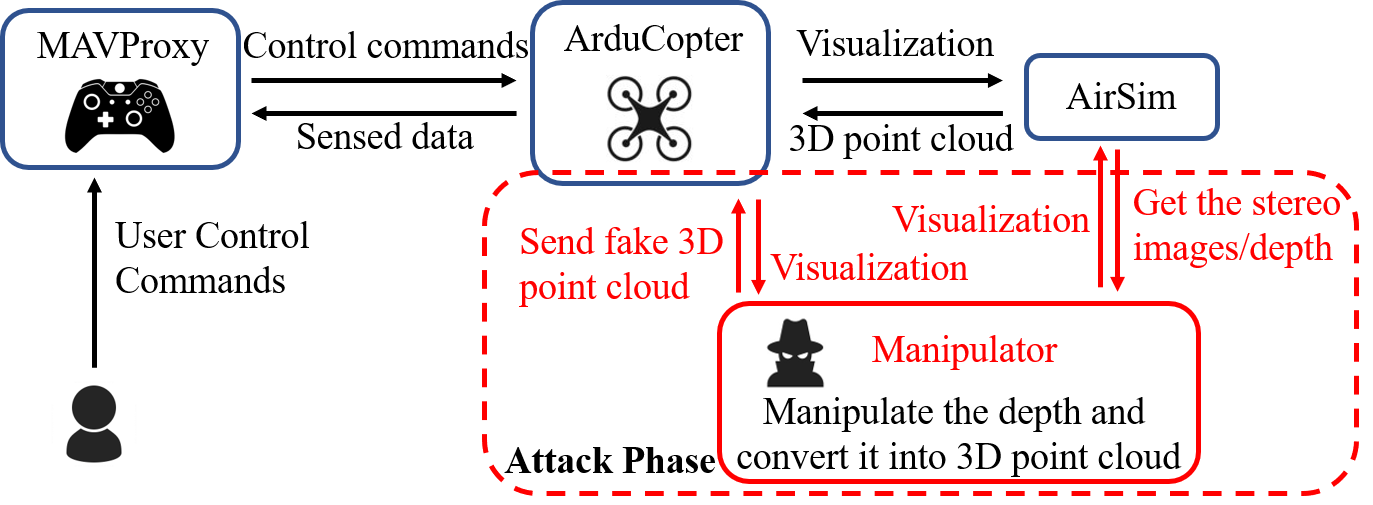

Simulation Workflow of DoubleStar

We simulate DoubleStar drone attacks using ArduPilot and AirSim. In the ArduPilot simulation, MAVProxy serves as the ground station and ArduCopter as the drone, while AirSim provides the sensor inputs to ArduCopter. We design a depth manipulator and embed it between ArduCopter and AirSim. By injecting different fake obstacle depths in a realistic environment, we demonstrate that our attack can achieve real-time drone control.
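The authors' depth manipulator is not shown on this page. Below is a hypothetical sketch of the core operation it could perform, assuming each depth frame arrives as a NumPy array of depths in meters. `inject_fake_obstacle`, the frame size, the region, and the 3 m fake depth are illustrative, and retrieving frames from AirSim and forwarding them to ArduCopter is omitted.

```python
import numpy as np


def inject_fake_obstacle(depth_m, fake_depth_m, rows, cols):
    """Return a copy of a depth frame (meters) with a rectangular fake obstacle.
    `rows` and `cols` are slice objects selecting the region to overwrite.
    Hypothetical helper, not the authors' manipulator."""
    tampered = depth_m.copy()
    region = tampered[rows, cols]
    # Keep whichever is nearer, so real obstacles closer than the fake one stay visible.
    tampered[rows, cols] = np.minimum(region, fake_depth_m)
    return tampered


frame = np.full((144, 256), 20.0)  # open space: 20 m everywhere (assumed)
tampered = inject_fake_obstacle(frame, 3.0, slice(50, 90), slice(100, 156))
print(tampered.min(), frame.min())  # 3.0 20.0
```

Taking the element-wise minimum makes the fake obstacle only ever bring the scene nearer. This mimics an added obstacle without erasing real ones that are already closer. The original frame is left untouched, so the unmodified data remains available for comparison.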

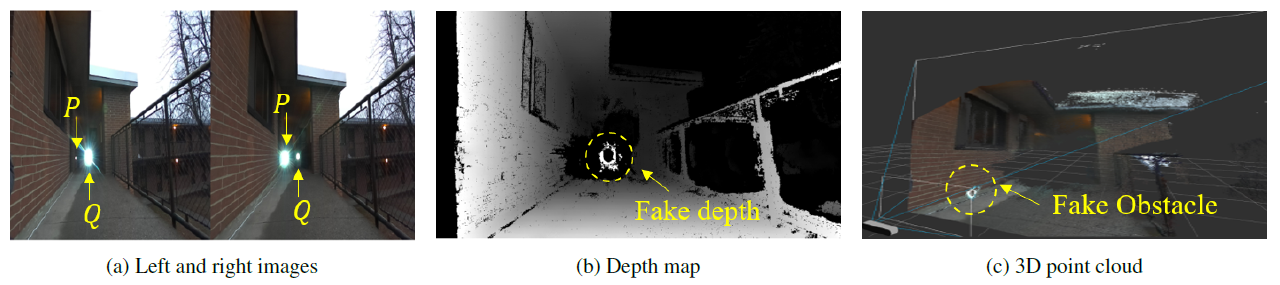

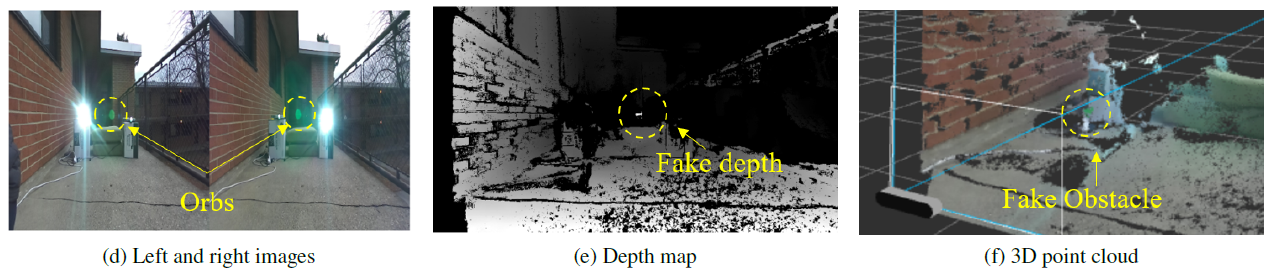

Attack Visualization on Stereo Cameras

DoubleStar includes two distinct attack formats, the beams attack and the orbs attack. These formats use projected light beams and lens flare orbs, respectively, to cause false depth perception. We can visualize both attacks through the ZED and Intel RealSense D415 stereo cameras.

Beams Attack on ZED

An attacker uses two projectors, P and Q, to inject light beams of the same projection intensity into the right and left cameras, respectively. In each camera's image, one of the injected light sources appears brighter than the other and becomes the more prominent feature. Because stereo matching searches for the most similar features in the two images, the depth estimation algorithm mismatches the two highlighted light sources as the same object. As a result, a fake near-distance depth is created in the depth map and visualized in the 3D point cloud.
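Where the fake depth lands can be estimated with a top-down pinhole model. This is a simplified geometric sketch, not the analysis from the paper. It assumes a rectified stereo camera with its left lens at x = -B/2 and its right lens at x = +B/2, both projectors at range D in the same horizontal plane, and each camera's image dominated by the projector aimed at it (Q for the left camera, P for the right camera). The left spot then appears at f(x_Q + B/2)/D and the right spot at f(x_P - B/2)/D. The disparity is therefore f(x_Q - x_P + B)/D, and triangulating with Z = fB/d gives Z = BD/(x_Q - x_P + B), with the focal length f canceling out. The `fake_depth` helper, the 0.12 m baseline, and the projector positions are hypothetical.

```python
def fake_depth(baseline_m, attack_distance_m, x_q_m, x_p_m):
    """Depth a rectified stereo camera would triangulate when its left image is
    dominated by projector Q (at x_q_m) and its right image by projector P (at x_p_m).
    Toy pinhole model: Z = B * D / (x_Q - x_P + B). Returns None when the
    disparity is not positive, i.e. no valid depth."""
    separation = x_q_m - x_p_m + baseline_m
    if separation <= 0:
        return None
    return baseline_m * attack_distance_m / separation


B, D = 0.12, 10.0  # hypothetical baseline and attack distance (meters)
print(f"{fake_depth(B, D, 0.0, 0.0):.2f}")     # single light source: 10.00
print(f"{fake_depth(B, D, 0.5, -0.5):.2f}")    # crossed beams: 1.07
print(f"{fake_depth(B, D, -0.05, 0.05):.1f}")  # uncrossed, 0.1 m apart: 60.0
```

When Q sits to the right of P, so that the two beams cross on their way to the camera, the fake obstacle appears much nearer than the projectors. When the projectors are slightly uncrossed, it appears much farther away. The model ignores spot size, brightness, and lens flare, so it only illustrates the direction of the effect.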

Orbs Attack on ZED

Two lens flare orbs, generated in the stereo images by strong light sources, can mislead depth perception into falsely identifying them as a 3D obstacle.

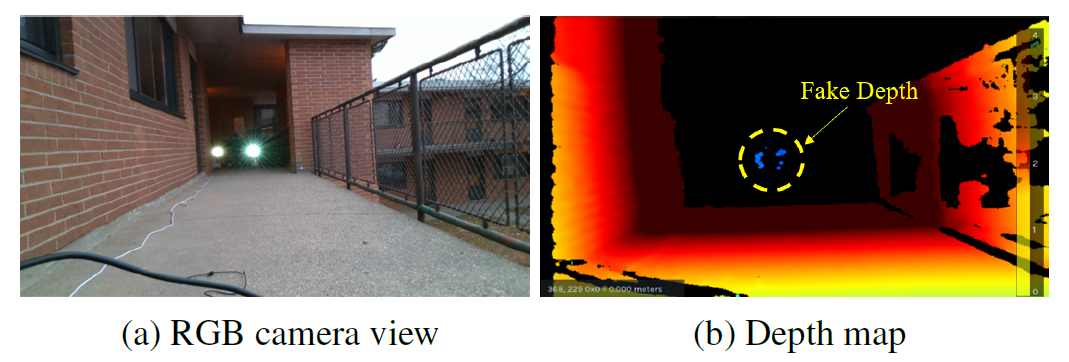

Attack Validation on RealSense

On the RealSense D415, a near-distance fake depth (around 0.5 m) can be generated from an attack distance of 10 m. This demonstrates that DoubleStar remains effective even without access to the camera's left and right images.